Cognitive approach to psychology: notes

Module 2.1: Concepts and principles of the cognitive approach to behaviour

What will you learn in this section?

- Assumptions of the cognitive approach

- The origin and the essence of these principles with reference to the history of cognitive psychology

- Introspectionism

- Psychoanalysis

- Behaviourism

- Cognitive psychology

- Behavioural economics

- Research methods used in the cognitive approach

Cognitive psychology is based on the assumptions that:

- Behavior is underpinned by mental representations, such as schemas, which lead humans to perceive and understand the world differently from each other because of their differing experiences and knowledge of the world.

- The ‘hidden world’ of mental processing, which behaviorism saw as incapable of study, can actually be investigated by scientific methods, allowing a more sophisticated view of behavior than that gained through behaviorism.

- A deeper understanding of human behavior is gained by combining the cognitive with biological and sociocultural approaches.

The contribution of research methods used in the cognitive approach to understanding human behavior

Cognitive psychologists use several research methods that reflect the principles on which the cognitive approach is based, such as:

- The experimental method, where an independent variable is manipulated under controlled conditions to assess its effect on a dependent variable. For example, Bruner & Minturn (1955) presented participants with an ambiguous figure that could be read as either the letter ‘B’ or the number ‘13’. Those who saw the figure between the letters ‘A’ and ‘C’ perceived it as ‘B’, while those who saw it between the numbers ‘12’ and ‘14’ perceived it as ‘13’. This suggests that perceptual schemas influence perception: people see what they expect to see, based on previous experience.

- The case study method, where one person or a small group of people is studied in detail. For example, Scoville & Milner (1957) studied HM who, as a result of brain surgery to treat his epilepsy, could not create new long-term memories although his short-term memory was intact, which suggests that we have separate short-term and long-term memory stores.

- Self-reports, such as interviews, where participants answer questions in a face-to-face situation. For example, Kohlberg (1969) studied the development of moral thinking by giving participants from different cultures moral dilemmas (hypothetical stories where a choice between two alternatives has to be made based on morality). He found the same sequence of moral development in all cultures, which suggests it is an innate biological process.

- The correlational method, where a relationship between variables is measured. For example, Schmidt et al. (2000) found a negative correlation between the number of times Dutch participants had moved house since they were at elementary school and the number of street names they could remember in the school area. This supports the idea that forgetting can occur due to newly learned information (e.g. the street names of where you live now) interfering with the recall of previously learned information (e.g. the street names around where you used to live).

- The observational method, where naturally occurring behavior is observed and recorded. For example, Slatcher & Trentacosta (2011) observed that parental depression negatively affected children’s behavior and cognitive socialization, which suggests that parents’ psychological states affect childhood cognitive development.

- Non-invasive brain scanning techniques, which are used to identify brain areas associated with specific cognitive processes. For example, D’Esposito et al. (1995) used fMRI scans to find that the prefrontal cortex is associated with the workings of the central executive, the controlling system of the working memory model.
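The idea of a negative correlation, as in the correlational method above, can be made concrete with a short calculation. The sketch below computes Pearson's correlation coefficient for a small data set. The numbers are invented purely for illustration and are not Schmidt et al.'s data; the function and variable names are also hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient for two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations from the means (unnormalized covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical participants: number of house moves since elementary school
# and number of street names recalled from the school area.
house_moves = [0, 1, 2, 3, 4, 5]
street_names_recalled = [12, 11, 9, 8, 6, 5]

r = pearson_r(house_moves, street_names_recalled)
print(f"r = {r:.2f}")  # a value below 0 indicates a negative correlation
```

A coefficient close to -1 means that as one variable rises the other tends to fall. A correlation on its own does not show that one variable causes the other.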

Module 2.2: Models of memory

What will you learn in this section?

- Atkinson and Shiffrin (1968): the multi-store memory model

- Sensory memory, short-term memory (STM), long-term memory (LTM)

- Duration, capacity and transfer conditions

- Baddeley and Hitch (1974): the working memory model

- The dual task technique

- The central executive, the visuospatial sketchpad and the phonological loop

Multi-store memory model (MSM)

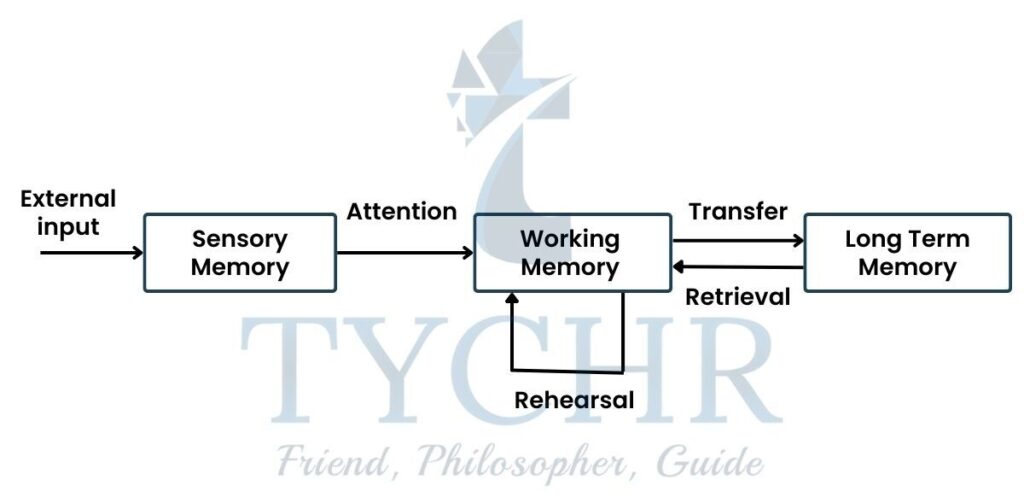

- Memory is a cognitive process used to encode, store and retrieve information. The multi-store memory model was proposed by Atkinson and Shiffrin in 1968. In this model, human memory is said to consist of three separate components:

1. sensory memory

2. short-term memory store

3. long-term memory store

- Each of these components is characterized by a specific duration (for how long the store is able to hold information) and capacity (how many units of information it can hold). In order for information to move to the next memory store, certain conditions have to be met.

1.) Sensory memory (SM)

- SM is not under cognitive control but is an automatic response to the reception of sensory information by the sense organs. It is the first storage system within the multi-store model.

- Unprocessed information is stored in separate sensory stores for various sensory inputs:

- the echoic store for auditory information,

- the iconic store for visual information,

- the haptic store for tactile information,

- the gustatory store for taste information, and

- the olfactory store for smell.

- Information that is attended to passes on to short-term memory (STM); the remainder fades rapidly through trace decay, leaving no lasting impression. The capacity of each sensory store is very large, with information held in an unprocessed, highly detailed and ever-changing form. For example, iconic memory can hold everything that enters our visual field, and echoic memory can hold everything that we perceive acoustically at any moment. Different sensory stores have different capacities, and capacity seems to decrease with age.

- All sensory memory stores have a limited duration, but the actual duration differs between stores, because different types of information decay at different rates. For example, traces in iconic memory break down after about one second of inattention, whereas traces in echoic memory can last two to five seconds.

2.) Short-term memory store (STM)

- Short-term memory (STM) temporarily stores information received from SM. It is an active (changing) memory system, as it holds the information currently being thought about. STM differs from LTM especially in terms of coding, capacity, duration and how information is forgotten.

Encoding, capacity and duration of STM

- Raw information arrives from SM in its original form, such as sound or vision, and is then encoded (entered into STM) in a form that STM can deal with more easily. For example, if the input into SM was the word ‘platypus’, this could be coded into STM in several ways:

a.) visually – by thinking of the image of a platypus

b.) acoustically – by repeatedly saying ‘platypus’

c.) semantically (through meaning) – by using knowledge of platypuses, such as their being venomous, egg-laying, aquatic monotremes that hunt prey through electrolocation.

- STM has a limited capacity, as only a small amount of information can be held in the store. Research indicates that between 3 and 9 items can be held, though capacity is increased by chunking: if items are grouped into units with a collective meaning, the amount of information held in storage can be increased. For example, the 12 letters of SOSABCLOLFBI can be grouped into the four chunks SOS/ABC/LOL/FBI.

- Information remains in STM for up to about 30 seconds before it is lost. This can be extended by rehearsal (repetition) of the information, which, if continued for long enough, leads to the information being transferred into LTM, where it becomes a more long-lasting feature.
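The chunking example above can be sketched in a few lines of code. This is only an illustration of the arithmetic (12 separate letters become 4 meaningful units), not a model of memory; the function name `chunk` is a hypothetical helper.

```python
def chunk(letters, size):
    """Split a string into consecutive chunks of `size` characters."""
    return [letters[i:i + size] for i in range(0, len(letters), size)]


letters = "SOSABCLOLFBI"
chunks = chunk(letters, 3)

# 12 individual letters would exceed a capacity of 9 items,
# but 4 meaningful chunks fit comfortably within it.
print(len(letters), "letters ->", len(chunks), "chunks:", "/".join(chunks))
```

The grouping only helps if each chunk already means something to the learner (SOS, ABC, LOL, FBI), which is why chunking depends on knowledge held in LTM.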

3.) Long-term memory store (LTM)

- Long-term memory (LTM) involves storing information over prolonged periods of time, potentially a lifetime, with information kept for more than 30 seconds counting as LTM. All information within LTM will have originally passed through the sensory register (SR) and STM, even though it may have undergone different forms of processing along the way. Research indicates that there are several types of LTM, such as semantic (what something means), episodic (when something was learned) and procedural (how to do something), and that LTMs are not of equal strength. Strong LTMs, such as when your birthday is, can be retrieved easily, but weaker LTMs may need more prompting. LTMs are not passive (unchanging): over time they may change or merge with other LTMs. This is why memories are not necessarily constant or accurate. Research also indicates that the process of shaping and storing LTMs is spread across multiple brain regions.

Encoding, capacity and duration of LTM

- Coding is the means by which information is shaped into representations of memories, and it determines the form in which LTMs are stored. The more deeply a stimulus is processed while it is being experienced, the stronger the coding will be (and the more easily the memory can be retrieved).

- The coding of verbal material in LTM is mainly semantic (based on meaning), although there are other forms of coding too, with research indicating a visual and an acoustic code.

- The potential capacity of LTM is unlimited. Information may be lost due to decay and interference, but such losses do not occur due to limitation of capacity.

- The duration of LTM depends on the lifespan of the individual, as memories can last a lifetime: many elderly people have detailed childhood memories. Items that were originally well coded last longer in LTM, and some memories, such as those based on skills rather than facts, also last longer. Material in STM that is not rehearsed is quickly forgotten, but information in LTM does not have to be continually rehearsed to be retained.

- LTM is mainly subdivided into:

- a. explicit (also known as declarative)

- it is easy to put into words

- Explicit LTMs are ones recalled only if consciously thought about

- Explicit memories are also often formed from several combined memories.

- Types: semantic and episodic

- b. implicit (also known as non-declarative)

- it is not easy to express in words

- implicit LTM does not require conscious thought to be recalled.

- Types: procedural LTM


Overall evaluation of the MSM

- The MSM was the first cognitive explanation of memory. It was influential, sparking interest and research that led to later models such as the working memory model and so to a deeper understanding of how memory works. The model is supported by cases of amnesia (memory loss).

- There is a lot of research supporting the idea that the SR, STM and LTM are separate memory stores. Amnesia patients typically lose either their LTM or their STM abilities, but not both, which confirms the distinction between the STM and LTM stores.

- The serial position effect (Murdock, 1962) supports the MSM’s idea that STM and LTM are separate stores. Words at the beginning and end of a list are recalled better than words in the middle. Words at the start of the list are remembered because they have been rehearsed and transferred to LTM (the primacy effect). Words at the end of the list are remembered because they are still in STM (the recency effect).

- The MSM is primarily criticized for being oversimplified, as it assumes there is a single STM store and a single LTM store. Research has identified several types of STM, such as one for spoken words and another for sounds, and several types of LTM, such as procedural, episodic and semantic memory.

- Cohen & Levinthal (1990) argue that memory capacity should be evaluated by the type of information to be recalled rather than just by the quantity of information stored: some information is easier to remember regardless of how much there is to learn, which the MSM disregards.

- The MSM describes memory in terms of its structure: the three memory stores and the processes of attention and verbal rehearsal. However, the MSM overemphasizes structure while undervaluing process.

- Case studies of amnesia support the MSM, as retrograde amnesia affects the ability to retrieve information from LTM, while anterograde amnesia affects the ability to transfer information from STM to LTM. They also support the idea of there being separate types of LTM, as generally not all forms of LTM are affected. Lawton (2015) reported on Scott Bolzan, an example of retrograde amnesia, who lost access to his LTM (though not his procedural LTM) due to a head injury but could still create new memories by transferring STMs to LTM. Scoville & Milner (1957) reported the case study of HM, who had brain tissue removed in an attempt to treat his epilepsy and who incurred anterograde amnesia: he was unable to encode new long-term memories, although his STM seemed unaffected, supporting the idea of separate memory stores. HM donated his brain to science on his death in 2008, aged 82. Sacks (2007) reported on the musician Clive Wearing, who in 1985 caught a virus that caused brain damage and amnesia, robbing him of the ability to transfer STMs into LTM as well as some LTM abilities. However, his procedural LTM was intact: he could still play the piano, though he had no knowledge that he was able to do so.

Working memory model

- Research revealed some phenomena that did not fit well with the view of STM as a unitary system. These “uncomfortable” results came from research studies that used the dual-task technique. In this technique, the participant is required to perform two memory operations simultaneously, for example, listen to a list of words (auditory stimulus) and memorize a series of geometrical shapes (visual stimulus). If STM really is a unitary store, the two sets of stimuli should interfere with one another, so that memory cannot exceed 7±2 units regardless of modality. However, it was found that performing two tasks at once sometimes had no negative effect on memory. For instance, drawing something does not interfere with memorizing an auditory sequence of digits.

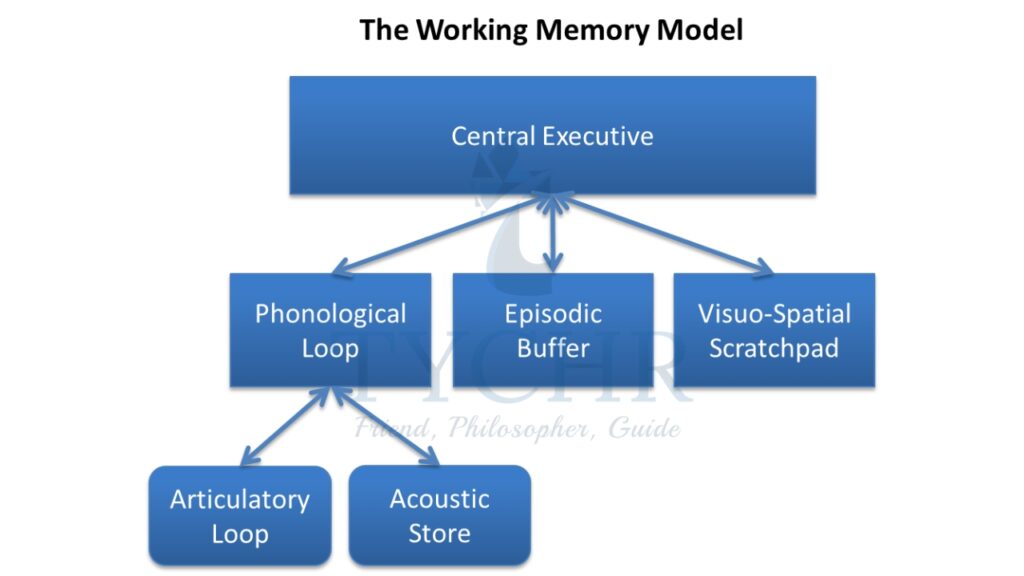

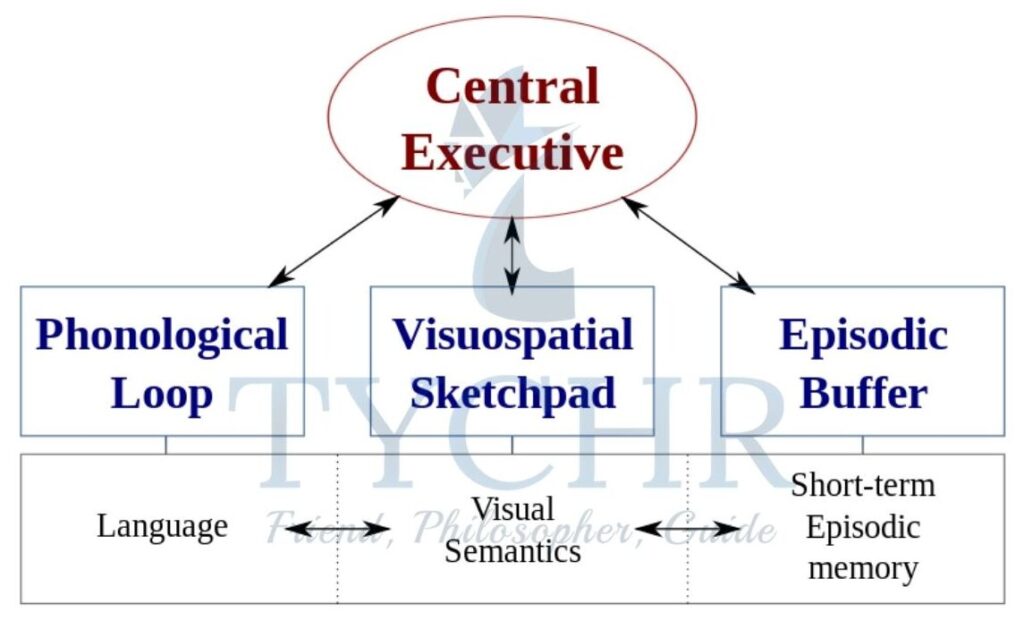

- To explain these conflicting findings, the working memory model was proposed by Baddeley and Hitch (1974). This model focuses on the structure of STM.

- In the original model, working memory consists of a central executive that coordinates two subsystems:

- The visuospatial sketchpad (“the inner eye”) holds visual and spatial information.

- The phonological loop holds sound information and is further subdivided into the:

a) phonological store (“the inner ear”): the inner ear holds sound in a passive manner; for example, it holds someone’s speech as we hear it.

b) articulatory rehearsal component (“the inner voice”): the inner voice performs two important functions.

- First, it turns visual stimuli into sounds. For example, if we are shown a list of written words, we may subvocally pronounce them; by switching from the visual to the auditory modality, the words enter our STM via the auditory channel.

- Secondly, it allows the information stored in the inner ear to be rehearsed. Constantly repeating the words increases the chances of transferring the information further into long-term memory.

- The central executive is a system which allocates resources between the visuospatial sketchpad and the phonological loop.

- In this sense, it is the “manager” for the other two systems.

- In 2000 Baddeley added a fourth component, the episodic buffer, which integrates information from the other components and also links this information to long-term memory structures.

The central executive

- The central executive (CE) is a supervisory system that acts as a filter to determine which information received by the sense organs is and is not attended to. It processes information in all sensory forms, directs information to the model’s slave systems and collects responses. It has a limited capacity and can only deal efficiently with one strand of information at a time. Because of this, it attends selectively to particular types of information, balancing tasks when several things need attention at once, for instance talking while driving. It also allows us to switch attention between different inputs of information.

1.) Phonological loop

- The phonological loop (PL) is a slave system that deals with auditory information (sensory information in the form of sound) and the order of the information, such as whether words occurred before or after each other. The PL is similar to the rehearsal system of the MSM, with a limited capacity determined by the amount of information that can be spoken out loud in about two seconds. As it is mainly an acoustic store, confusions occur with similar-sounding words. Baddeley (1996) divided the PL into two sub-parts: the primary acoustic store (PAS) and the articulatory process (AP). The PAS, or inner ear, stores words that have recently been heard, while the AP, or inner voice, keeps information in the PL through sub-vocal repetition and is linked to speech production.

2.) Visuospatial Sketchpad

- The visuospatial sketchpad (VSS), or inner eye, is a slave system that processes non-phonological information and serves as a temporary store for visual and spatial items as well as their relationships (what they are and where they are). The VSS helps people to navigate and interact with their physical surroundings, with mental images being used to encode and rehearse the information.

3.) The episodic buffer

- Baddeley (2000) added the episodic buffer (EB) as a third slave system, because the model needed a general store in order to work properly. The PL and VSS deal with the processing and temporary storage of specific types of information, but they have limited capacity, and the CE has no storage capacity, so it cannot hold items that combine visual and acoustic properties. The EB was therefore introduced to explain how information from the CE, PL, VSS and LTM can be temporarily stored together.

Module 2.3: Schema theory

What will you learn in this section?

- Introduction to schemas

- Types of schemas:

- Social schemas

- Scripts

- Self-schemas

- Gregory’s top-down theory

- Gibson’s bottom-up theory

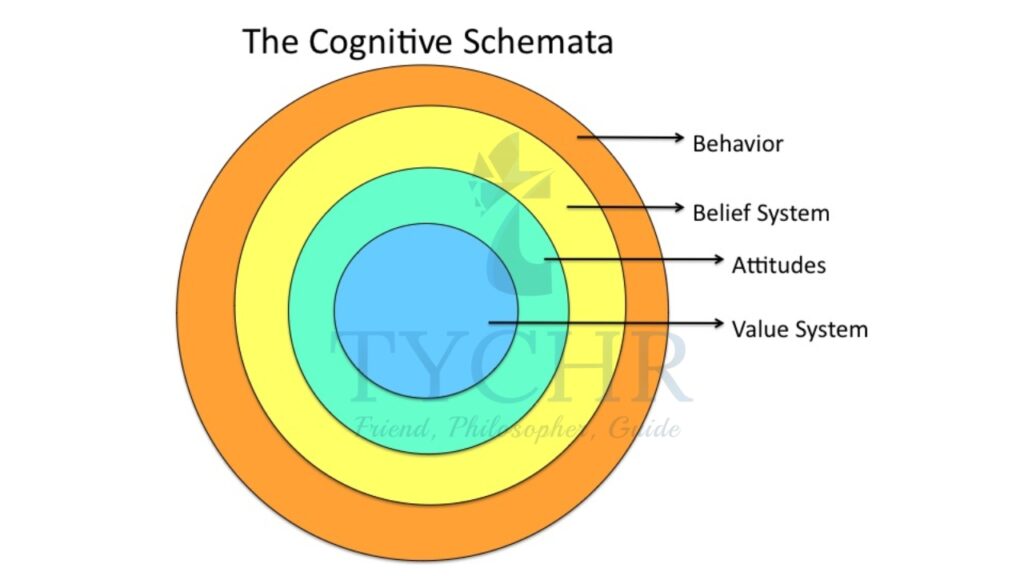

- A schema is a cognitive framework for structuring information about the physical world and the events and behavior occurring within it. Knowledge therefore becomes stored within memory in an organized way so that future experiences are perceived in preset ways. Schema processing generally does not require conscious thought and thus is a largely automatic process. Although this benefits individuals by reducing the amount of cognitive energy used, it also has a large negative effect in creating biases in cognitive processes such as thinking, perception and memory, which then limit an individual’s ability to think about, perceive or remember events accurately.

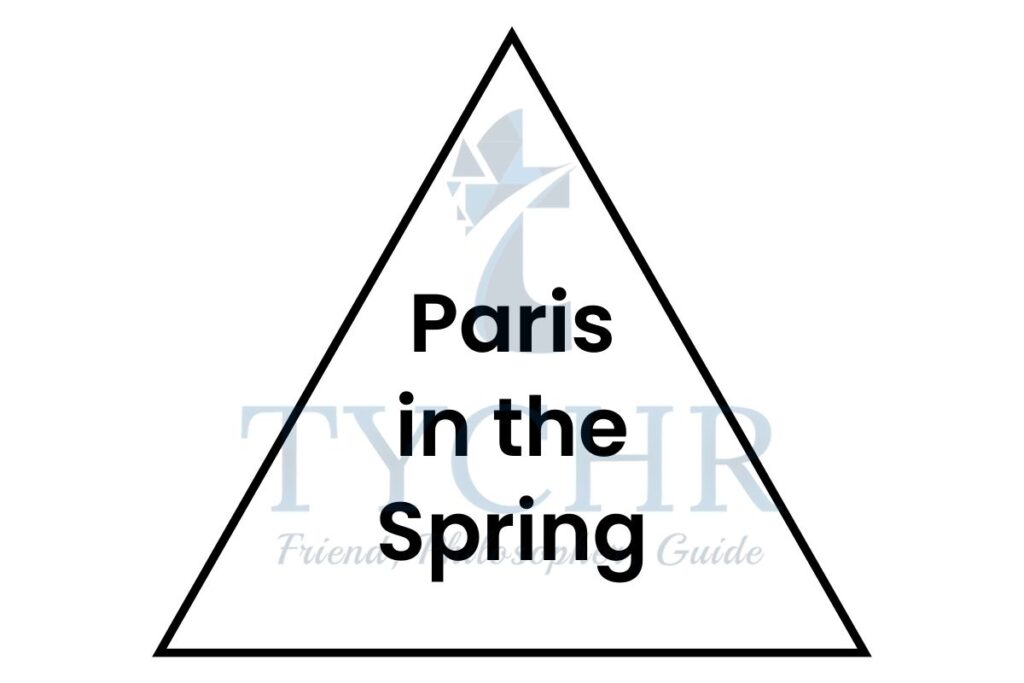

- A simple way to see how stimuli are misperceived when they do not fit what is expected is to present the written statement ‘Paris in the the spring’ to participants and ask them to state what they can see. Many participants repeatedly read ‘Paris in the spring’, even though this is wrong, because that is what they think they should see, based on previous experience of language.

- There are many special cases and types of schemas, depending on the particular aspect of human experience that is influenced by mental representations. For example, the following types of schemas have been proposed:

- Social schemas: mental representations about various groups of people, for example, a stereotype.

- Scripts: schemas about sequences of events, for example, going to a restaurant or making coffee.

- Self-schemas: mental representations about ourselves.

1.) Social schemas

- One example that illustrates the effect of social schemas on our perception and interpretations is Darley and Gross (1983). In this study, one group of participants was led to believe that a child (a girl) came from a high socio-economic status (SES) background and the other group was told that the child came from a low-SES background. Both groups then watched the same video of the child taking an academic test. They were required to judge the academic performance of the girl. In accordance with the predictions of social schema theory, participants who thought that the child came from a high-SES environment gave considerably higher ratings for the academic performance of the girl in the video. This showed that pre-stored schemas (about what it means to be rich and what it means to be poor) were used as a lens through which the ambiguous information was perceived, and participants’ interpretations were changed accordingly.

2.) Scripts

- A study by Bower, Black and Turner (1979) showed how scripts stored in our memory help us make sense of sequential data. The aim of the study was to see if in recalling a text, subjects will use the underlying script to fill in gaps of actions not explicitly mentioned in the text. The idea behind the study is as follows. Suppose an underlying abstract script exists for the following sequence of events: a, b, c, d, e, f, g. Now suppose there is a text that includes sentences corresponding to events a, c, e, g. If you present this text to a group of participants and ask them (after a series of filler tasks) to recall the text, they will probably “fill in the gaps”, that is, remember events b, d, f even though they were not originally in the text. This rests on the idea that we encode the text based on the underlying script, that is, we remember the generalized idea behind a text rather than the text itself.

3.) Self-schemas

- The concept of self-schemas is used extensively in Aaron Beck’s theory of depression. The negative self-schema that depressed people develop about themselves, and the corresponding automatic thinking patterns are, in this theory, the driving force of depression.

Schema theory and perception: top-down and bottom-up processing

- Top-down processing concerns cognitive analysis involving more than just the sensory information received by the brain. Bottom-up processing however concerns cognitive analysis involving only the sensory information received by the brain, without any further cognitive analysis.

- Top-down and bottom-up processing are probably best understood through reference to the cognitive process of perception, the interpretation of sensory data.

- Top-down processing (as described by Richard Gregory’s theory) best explains perception when sensory information is incomplete, through the use of schema to make inferences about our physical world.

- Bottom-up processing (as described by James Gibson’s theory) best explains perception when sensory information is sufficient for perception to occur directly, without any need for the use of schema to facilitate higher level cognitive processing.

Gregory’s top-down theory

- Gregory (1970) argued that perception is an unconscious ongoing process of testing hypotheses; an active search for the best interpretation of sensory data based on previous experience. The search is indirect, going beyond data provided by sensory receptors to involve processing information at a higher, ‘top-down’ cognitive level.

- Gregory sees sensory information as weak, incomplete or ambiguous. For example, the retinal image of a banana cannot display its taste. Perception therefore isn’t directly experienced from sensations, but involves a dynamic search for the best interpretation of stimuli, with what is perceived being richer than the information contained within sensory data. The eye for Gregory is not a camera to view the world directly.

- Sensory data is often incomplete or ambiguous, and hypotheses are constructed about its meaning. An example is the experience of reversible figures, like being able to see either a vase or two faces in the same image. Both are perceived, as Gregory believes separate hypotheses are generated and tested out, though usually there would be enough sensory data to decide which interpretation is correct. Visual illusions are therefore experienced when confusions occur.

Evaluation of Gregory’s top-down processing theory

- Gregory’s theory increased the understanding of perception, generating interest and research and creating much evidence to support the theory.

- It seems logical that interpretations based on previous experience would occur when viewing conditions are incomplete or ambiguous. For example, if incomplete features indicated an animal could be a duck or a rabbit, the fact it was on water would determine that it was a duck.

- Eysenck & Keane (1990) argue that Gregory’s theory is better at explaining the perception of illusions than real objects, because illusions are unreal and easy to misperceive, while real objects provide enough data to be perceived directly.

- However, Gregory’s explanation of visual illusions is not without criticism. According to him, once it is understood why an illusion occurs, perception should alter so that the illusion is no longer experienced. However, illusions continue to be experienced, which weakens Gregory’s explanation.

- Most research supporting Gregory involves laboratory experiments where fragmented and briefly presented stimuli are used, which are difficult to perceive directly. Gregory thus underestimates how rich and informative real-world sensory data can be, where direct perception is possible.

- People’s perceptions in general, even those of people from different cultures, are similar. This would not be true if individual perceptions arose from individual experiences, weakening support for Gregory.

- Gregory’s theory suggests that memory is constantly searched to find the best interpretation of incoming sensory data. This would be time-consuming and inefficient, casting doubt on Gregory’s explanation.

Gibson’s bottom-up theory

- Gibson (1966) argued that there is enough information within the optical array (the pattern of light reaching the eyes) for perception to occur directly, without higher-level cognitive processing. Individuals’ movements and those of surrounding objects within an environment aid this process. This involves innate mechanisms that require no learning from experience.

- Gibson saw perception as resulting from the direct detection of environmental invariants, unchanging aspects of the visual world. These possess enough sensory data to allow individuals to perceive features of their environment, like depth, distance and the spatial relationships of where objects are in relation to each other.

- Gibson believed that texture gradients found in the environment are similar to gradients in the eye, and these corresponding gradients allow the experience of depth perception. This grew into a theory of perception that includes the optical array, texture gradients, optic flow patterns, horizon ratio and affordances.

- Gibson believed perception was a bottom-up process, one constructed directly from sensory data. He saw the perceiver not as the brain but as an individual within their environment. The purpose of perception is therefore to allow people to function safely in their environment, and he argued that illusions were two-dimensional, static creations of artificial laboratory experiments.

The optical array

- The optical array is the structure of patterned light that enters the eyes. It is an ever-changing source of sensory information, occurring due to the movements of individuals and objects within their world. It contains different intensities of light shining in different directions, transmitting sensory data about the physical environment. Light itself does not allow direct perception, but the structure of the sensory information contained within it does. Movement of the body, the eyes, the angle of gaze, and so on, continually updates the sensory information being received from the optical array. The optical array also has invariant elements, providing constant sources of information, which contribute to direct perception from sensory information and are not changed by the movements of observers.

Optic flow patterns

- Optic flow patterns are an unambiguous source of information about height, distance and speed; they directly inform perception and provide a rich, ever-changing source of information.

- ‘Optic flow’ refers to the visual phenomenon continually experienced as individuals move around their environment: the apparent visual motion of the surroundings. Someone sitting on a train looking out of the window sees buildings and trees seemingly moving backwards: it is this apparent motion that forms the optic flow.

- Information about distance is also conveyed; distant objects such as hills appear to move slowly, while close-up objects seem to move quicker. This depth cue is known as motion parallax.

- As speed increases, the optic flow also increases. Optic flow also varies depending on the angle between the observer’s direction of movement and the direction of the observed object. When traveling forwards, the optic flow is quickest when the object being regarded is 90 degrees to the observer’s side, or directly above or below. Objects immediately in front have no optic flow and seem motionless. However, the edges of such objects appear to move, as they are not directly in front, and thus the objects seem to grow larger.
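The relationships described in this section (flow grows with speed, shrinks with distance, is greatest at 90 degrees and is zero straight ahead) can be summarized with a simple geometric sketch. It assumes a standard simplification that is not taken from Gibson's own writing: an observer moving in a straight line at constant speed past a stationary point, whose apparent angular speed is speed × sin(angle) / distance. The function name and the example values are hypothetical.

```python
from math import radians, sin


def angular_speed(speed, distance, angle_deg):
    """Apparent angular speed (radians per second) of a stationary point.

    speed     -- observer's forward speed (metres per second)
    distance  -- current distance from observer to the point (metres)
    angle_deg -- angle between the direction of travel and the point
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    return speed * sin(radians(angle_deg)) / distance


train_speed = 20.0  # hypothetical train speed in m/s

# Motion parallax: a nearby tree sweeps past faster than a distant hill.
near_tree = angular_speed(train_speed, distance=10.0, angle_deg=90)
far_hill = angular_speed(train_speed, distance=1000.0, angle_deg=90)
print("near tree flows faster than far hill:", near_tree > far_hill)

# An object directly ahead (0 degrees) has no optic flow.
print("flow straight ahead:", angular_speed(train_speed, 10.0, 0))
```

In this simplified model, doubling the observer's speed doubles the flow at every angle, matching the statement that optic flow increases with speed.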

Texture gradient

- Texture gradients are surface patterns providing sensory information about depth, shape, and so on. Physical objects have surfaces with different textures that allow direct perception of distance, depth and spatial awareness. Due to constant movement, the ‘flow’ of texture gradients conveys a rich source of ever-changing sensory information to an observer; for instance, as objects come nearer they appear to expand.

- Two depth cues that make the third dimension of depth directly available to the senses are motion parallax and linear perspective. The latter is a cue provided by lines that appear to converge as they get further away.

- Classic texture gradients include frontal surfaces, which project a uniform gradient, and longitudinal surfaces, like roads, which project slopes that decrease as the distance from the observer increases.

- Another type of invariant sensory information that enables direct perception is the horizon ratio, which relates to the position of objects relative to the horizon. The horizon ratio is calculated by dividing the amount of an object above the horizon by the amount below it, and it indicates differences in size between objects at the same distance from the observer.

- Different-sized objects at equal distances from the observer present different horizon ratios, while objects of equal size standing on level surfaces have the same horizon ratio. As an observer approaches objects, they seem to grow, though the proportion of the object above and below the horizon remains constant and is a perceptual invariant.
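A small worked example shows why the horizon ratio is invariant. The sketch below assumes level ground, an object taller than the observer's eye height, and a simple pinhole-style projection in which the projected extent of any vertical segment is proportional to its height divided by its distance. These assumptions, and all names and numbers, are illustrative rather than taken from Gibson.

```python
def projected_extents(object_height, eye_height, distance):
    """Return (above, below): projected extents above and below the horizon.

    On level ground the horizon cuts every object at the observer's eye
    height, so the part above is (object_height - eye_height) and the
    part below is eye_height, each scaled by 1 / distance.
    """
    above = (object_height - eye_height) / distance
    below = eye_height / distance
    return above, below


def horizon_ratio(object_height, eye_height, distance):
    """Amount of the object above the horizon divided by the amount below."""
    above, below = projected_extents(object_height, eye_height, distance)
    return above / below


eye = 1.5  # hypothetical eye height in metres

# The same 6 m tree seen from 40 m and then from 10 m: it looks bigger
# up close, but its horizon ratio stays the same.
print(horizon_ratio(6.0, eye, 40.0), horizon_ratio(6.0, eye, 10.0))

# A 3 m post at the same distance as the tree has a different ratio.
print(horizon_ratio(3.0, eye, 10.0))
```

Because the distance cancels out of the division, the ratio depends only on the object's height relative to eye height, which is why it can signal size without any extra processing.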

Affordances

- Affordances involve attaching meaning to sensory information and concern the quality of objects to allow actions to be carried out on them (action possibilities). For instance, a cup ‘affords’ drinking liquids. Affordances are therefore what objects mean to observers and are related to psychological state and physical abilities. For an infant who cannot yet walk properly, a mountain does not afford climbing.

- Gibson saw affordances as imparting directly perceptible meaning to objects. Because evolutionary forces shaped perceptual skills in such a way that learning experiences were not necessary.

- This rejects Gregory’s belief that the meaning of objects is stored in LTM from experience and requires cognitive processing to access.

Evaluation of Gibson’s bottom-up processing theory

- Gibson’s theory explains how perception occurs quickly, which Gregory’s theory cannot, though Gibson cannot explain why illusions are perceived. He dismissed them as artificial laboratory constructions viewed under restrictive conditions, but some occur naturally under normal viewing conditions.

- The idea that the optical array provides direct information about what objects permit individuals to do (affordances) seems unlikely. Knowledge about objects is affected by cultural influences, experience and emotions. For example, how could an individual directly perceive that a training shoe is for running?

- Gibson’s and Gregory’s theories are similar in seeing perception as hypothesis-based. Gregory explains this as a process of hypothesis formation and testing, with the flow of information processed from the top down, while Gibson sees it as an unconscious process originating from evolutionary forces, with the flow of information processed from the bottom up. Another similarity is that both agree that visual perception arises from light reflected from surfaces and objects, and that a specific biological system is needed for perception.

- Gregory’s and Gibson’s theories involve the nature versus nurture debate. Gregory’s indirect theory emphasizes learning experiences and thus the influence of nurture, while Gibson’s direct theory focuses more on nature’s role.

Module 2.5: Thinking and decision-making

What will you learn in this section?

- Normative models and descriptive models

- Normative models describe the way that thinking should be; they assume unlimited time and resources, examples: formal logic, theory of probability, utility theory

- Descriptive models describe thinking as it actually occurs in real life

- Macro-level decision-making models

- The theory of reasoned action (Fishbein, 1967) and the theory of planned behaviour (Ajzen, 1985)

- Micro-level decision-making models

- The adaptive decision maker framework (Payne, Bettman and Johnson, 1993)

- Heuristics

- The function of thinking is to modify information: we break down information into smaller parts (analysis), bring different pieces of information together (synthesis), relate certain pieces of information to certain categories (categorization), make conclusions and inferences, and so on. Unlike other cognitive processes, thinking produces new information. Using thinking, we combine and restructure existing knowledge to generate new knowledge. Thinking has been defined in many ways, including “going beyond the information given” (Bruner, 1957) and “searching through a problem-space” (Newell and Simon, 1972).

- Decision-making is a cognitive process that involves selecting one of several possible beliefs or actions, that is, making a choice between alternatives. It is closely related to thinking, because before we can choose, we have to analyze. Thus, thinking is an integral condition of every decision-making act.

The relationship between thinking and decision-making:

- Thinking and decision-making are related cognitive processes that involve pondering on knowledge and information in order to select from the options available. However, humans do not necessarily think in the most rational (sensible) way and thus do not necessarily make logical choices when making decisions.

- Hastie & Dawes (2001) argued that different sorts of people in very different types of situations actually think about making decisions in the same way, which suggests that people have a common set of cognitive processes. It is the limitations of these processes that restrict choice and can lead to illogical decisions being made.

- There are two basic types of theory concerning thinking and decision making:

- normative theories, which focus on how we should make decisions from a logical point of view.

- descriptive theories, which focus on how people actually make decisions.

Normative models

- The ideal way to think is outlined in normative models. They assume that there is no limit to the amount of time and resources needed to make a decision. They define what is right and wrong, correct and incorrect, effective and ineffective.

a) One example of a normative model of thinking is formal logic, as developed by Aristotle. The building block of the system of formal logic is a deductive syllogism: a combination of two premises and a conclusion (which follows from these premises). There is a set of rules that describes when syllogisms are valid and when they are not. For example:

- (Premise 1) All men are mortal.

- (Premise 2) All Greeks are men.

- (Conclusion) Hence, all Greeks are mortal.

- This example is valid, and formal logic explains why. In fact, there is even a name for this type of syllogism: Barbara. “A” stands for a universal affirmative statement (“All men are mortal” is universal because it refers to all men, and affirmative because it asserts rather than denies). Since all three statements in the syllogism are of the same type, it gives us triple A, hence the name (bArbArA).
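One way to see why the Barbara form is valid is to read “All X are Y” as “the set X is a subset of the set Y”. The sketch below uses that reading (an assumption for illustration, not Aristotle's own formulation) and checks every combination of small sets for a counterexample. The function names and the contrasting invalid form are hypothetical additions.

```python
from itertools import combinations


def all_subsets(universe):
    """Every subset of a small universe, as Python sets."""
    items = list(universe)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def barbara_counterexamples(universe):
    """Cases where 'All M are P' and 'All S are M' hold but 'All S are P' fails."""
    subsets = all_subsets(universe)
    return [
        (s, m, p)
        for s in subsets for m in subsets for p in subsets
        if m <= p and s <= m and not s <= p
    ]


def undistributed_middle_counterexamples(universe):
    """Invalid form: 'All M are P' and 'All S are P', therefore 'All S are M'."""
    subsets = all_subsets(universe)
    return [
        (s, m, p)
        for s in subsets for m in subsets for p in subsets
        if m <= p and s <= p and not s <= m
    ]


# Concrete instance of the example above, with made-up set members.
men = {"Socrates", "Plato", "Caesar"}
mortals = men | {"Bucephalus"}
greeks = {"Socrates", "Plato"}
conclusion = greeks <= mortals  # follows from men <= mortals and greeks <= men

print(conclusion)
print(len(barbara_counterexamples({1, 2, 3})))
print(len(undistributed_middle_counterexamples({1, 2, 3})) > 0)
```

A brute-force search over a three-element universe is not a proof, but it shows the contrast: the valid form has no counterexamples, while the invalid form has some.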

b) Another example of a normative model is the theory of probability. When we make investment decisions, we might go with our intuition, but the “normative” thing to do is to analyze the success or failure frequencies in the past for similar enterprises under similar circumstances, and then make decisions based on the likely outcomes projected from this analysis.

c) The normative model for decisions involving uncertainty and trade-offs between options is utility theory. According to this theory, the rational decision-maker should calculate the expected utility (the degree to which it helps us achieve our goals) for each option and then choose the option that maximizes this utility.
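The calculation that utility theory prescribes can be sketched as follows. The options, probabilities and utility values are entirely hypothetical and exist only to show the arithmetic: each option is a list of (probability, utility) pairs, and the expected utility is the probability-weighted sum.

```python
def expected_utility(outcomes):
    """Probability-weighted sum of utilities for one option.

    `outcomes` is a list of (probability, utility) pairs whose
    probabilities must sum to 1.
    """
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * u for p, u in outcomes)


# Hypothetical investment options with made-up numbers.
options = {
    "safe bond": [(1.0, 50)],
    "new venture": [(0.3, 200), (0.7, 0)],
    "established firm": [(0.8, 90), (0.2, -20)],
}

utilities = {name: expected_utility(outcomes) for name, outcomes in options.items()}
best_option = max(utilities, key=utilities.get)

for name, value in utilities.items():
    print(name, round(value, 2))
print("choose:", best_option)
```

In practice the probabilities would come from the kind of frequency analysis described under the theory of probability, and the utilities from the decision-maker's own goals.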

Descriptive models

- Descriptive models show what people actually do when they think and make decisions. They aim to accurately describe real-life thought patterns, and the main measure of the effectiveness of such models is how closely the model matches observed data from different samples of participants.

a) The theory of reasoned action (TRA)

- The purpose of the theory of reasoned action (TRA) is to explain how attitudes and behaviors relate when people make choices. Martin Fishbein proposed this theory in 1967. The theory’s main goal is to show that a person’s choice of a particular behavior is influenced by the expected outcome of that behavior.

- A predisposition known as the behavioral intention is created when we believe that a particular behavior will result in a particular (desired) outcome. The stronger the behavioral intention, the stronger the effort we put into implementing the plan and hence the higher the probability that this behavior will actually be executed.

- There are two factors that determine behavioral intention:

- Attitudes: an attitude describes your individual perception of the behavior (whether that behavior is positive or negative).

- Subjective norms: the subjective norm describes the perceived social pressure regarding this behavior (whether it is socially acceptable or desirable).

- Depending on the situation, attitudes and subjective norms may have varying degrees of importance in determining intention.

b) The theory of planned behavior (TPB)

- In 1985 the theory was extended and became what is known as the theory of planned behavior (TPB).

- This theory introduced the third factor that influences behavioral intentions: perceived behavioral control

- This was added to explain situations in which the attitude is positive and subjective norms do not prevent you from behaving; however, you do not think you are capable of performing the action.
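The two theories are often summarized as a weighted combination of their factors. The sketch below assumes a simple linear form; the weights, the scale from -1 (very negative) to +1 (very positive) and the function names are hypothetical, chosen only to illustrate how adding perceived behavioral control can lower intention even when attitude and norms are favorable.

```python
def intention_tra(attitude, subjective_norm, w_attitude=0.5, w_norm=0.5):
    """TRA sketch: intention as a weighted sum of attitude and subjective norm."""
    return w_attitude * attitude + w_norm * subjective_norm


def intention_tpb(attitude, subjective_norm, perceived_control,
                  w_attitude=0.4, w_norm=0.3, w_control=0.3):
    """TPB sketch: the same sum with perceived behavioral control added."""
    return (w_attitude * attitude
            + w_norm * subjective_norm
            + w_control * perceived_control)


# Hypothetical person: positive attitude, supportive norms, but a strong
# belief that they are not capable of performing the behavior.
attitude, norm, control = 0.8, 0.6, -0.9

tra_score = intention_tra(attitude, norm)
tpb_score = intention_tpb(attitude, norm, control)
print(round(tra_score, 2), round(tpb_score, 2))
```

The weights stand in for the point made above that attitudes and subjective norms may vary in importance depending on the situation.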

The information processing approach

- The information processing approach of thinking and decision making sees:

1. humans as very selective about which available information they attend to and how they use it

2. the acquisition and processing of information as having cognitive and emotional costs

3. humans as using heuristics (mental shortcuts) to select and process information

4. heuristics as chosen according to the nature of a decision to be made

5. beliefs and preferences as constructed through the decision making process.

The adaptive decision maker framework

- The adaptive decision maker framework (ADMF) is part of the information processing approach (IPA) and focuses upon choice in decision-making, especially with preferential choice problems (when no single option is best in all aspects of choice). Preferential choice problems are solved by acquiring and evaluating information about possible choices. Available options vary according to:

1. their perceived desirability

2. the degree of certainty of their value

3. the willingness of a decision-maker to accept loss on one aspect of a choice in return for gain on another aspect

For example:

- Siusaidh is fed up with living in the city and wants to move house. She must first decide on the desirability of the attributes (which aspects of choice are important to her). For example, it is important that the house is in a rural location, is within one hour’s travel of work, has an easy-to-maintain garden, is in a low-crime area and has a good school nearby. Other aspects are not as important, such as the need for shopping facilities, pubs and restaurants, and having a garage. Also, some possible choices have unique aspects, such as one house that has a conservatory and another that has a pretty view.

- Then there is the degree of certainty of receiving an attribute value. This concerns the fact that some aspects are more certain than others, such as a house being in a rural location, but others are less certain, such as a garden being easy to maintain or how accurate the ratings of low crime and a good local school are.

- There is also the willingness to accept loss on one attribute for gain on another, for example having to travel more than one hour to work in return for having a good school nearby.

- There are a number of strategies (heuristics) that Siusaidh could use to make a decision between choices involving several aspects. Which strategy is chosen depends on the demands of the task (such as the number of alternative choices of houses), how accurate a decision must be in terms of important aspects, and individual differences (such as how important the decision is to Siusaidh). Some strategies might use all information, others only selected information. Some might focus on possible house choices, processing each house in turn, while other strategies may consider all possible houses on one aspect before considering them on another aspect.

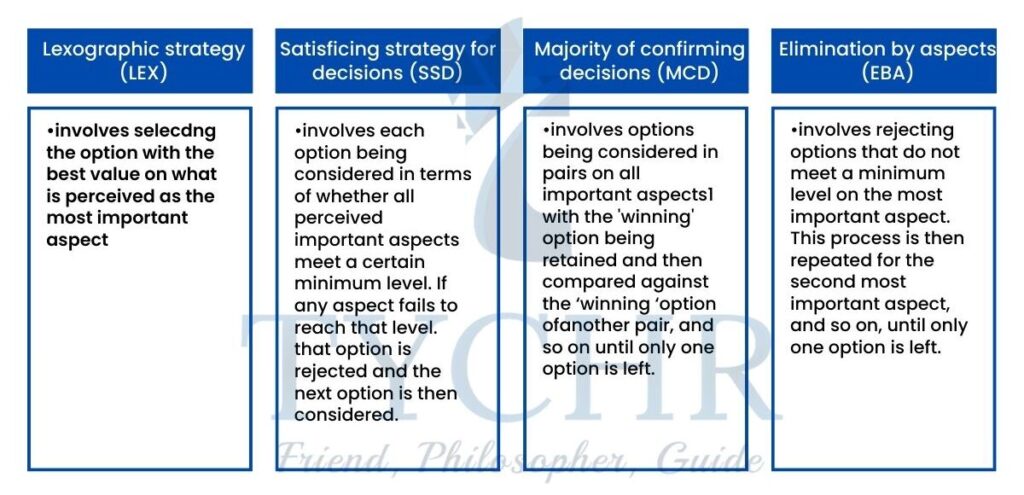

Heuristics

- Heuristics involve using simpler decision-making processes. Such processes involve less cognitive effort because they focus on only some of the relevant information. This can lead to less logical decisions being made, especially when there are a greater number of important aspects to consider. Several types of strategy have been identified, including the weighted additive (WADD), lexicographic (LEX), satisficing (SAT) and elimination by aspects (EBA) strategies.

- Let’s consider a hypothetical example. Imagine you are planning to meet some friends (some of whom are bringing children) at a restaurant and you are choosing from five options against five attributes (quality of food, price, and so on). Each attribute may have one of three possible values: “bad”, “average” and “good”.

| Restaurant | Quality of food | Price | Distance from home | Catering to variety of dietary needs | Playroom for children |
| --- | --- | --- | --- | --- | --- |
| Southern Sun | Good | Average | Good | Bad | Bad |
| Northern Wind | Bad | Good | Average | Average | Bad |
| Global Junction | Average | Good | Bad | Bad | Average |
| Eastern Delicacy | Good | Average | Average | Good | Bad |

- If you are using the WADD strategy, assign numerical values to attributes (for example, bad = 1, average = 2, good = 3) and then calculate the weighted sum of attributes for each of the alternatives (here every attribute is weighted equally). The score you get for “Southern Sun” is 3 + 2 + 3 + 1 + 1 = 10. The restaurant that scores the highest is “Eastern Delicacy” (3 + 2 + 2 + 3 + 1 = 11), so this is the one you will choose.

- If you are using the LEX strategy, first decide which of the attributes is most important to you (say, playroom for children) and then pick the best alternative for that attribute (in our case, “Global Junction”).

- In SAT, decide on a cut-off score (for example, you decide that all attributes in the best choice should be at least average) and look for the option that satisfies this condition. If no such option is found (as is the case in the current example, since each of the alternatives scores “bad” for at least one of the attributes), relax the condition. For example, you might decide that the attribute playroom for children does not necessarily have to be good or average, and now “Eastern Delicacy” would satisfy your new condition and be the restaurant of choice.

- In EBA, your thinking might be like this. Important attributes should be at least “average”. The most important attribute is “quality of food”. So you eliminate “Northern Wind”. The second most important attribute is “catering to a variety of diets”. So you eliminate three more restaurants. Only “Eastern Delicacy” is left, and this is the restaurant you choose.

- Strategies like WADD and SAT are called alternative-based, because you are considering different attributes for the same alternative. Strategies like LEX and EBA are attribute-based, because you select an important attribute and compare different alternatives against this attribute.
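The four strategies can be sketched in code using the ratings from the table. This is a minimal illustration under stated assumptions: only the four restaurants listed in the table are included, the short attribute keys (`food`, `price`, and so on) are labels invented here, WADD uses equal weights by default, and SAT returns the first alternative that passes in table order.

```python
# Ratings copied from the table above (four restaurants are listed there).
SCORE = {"Bad": 1, "Average": 2, "Good": 3}
ATTRIBUTES = ["food", "price", "distance", "dietary", "playroom"]
RESTAURANTS = {
    "Southern Sun": ["Good", "Average", "Good", "Bad", "Bad"],
    "Northern Wind": ["Bad", "Good", "Average", "Average", "Bad"],
    "Global Junction": ["Average", "Good", "Bad", "Bad", "Average"],
    "Eastern Delicacy": ["Good", "Average", "Average", "Good", "Bad"],
}


def scores(name):
    """Map each attribute to the numeric score of one restaurant."""
    return {a: SCORE[r] for a, r in zip(ATTRIBUTES, RESTAURANTS[name])}


def wadd(weights=None):
    """Weighted additive: highest weighted sum across all attributes."""
    weights = weights or {a: 1 for a in ATTRIBUTES}
    totals = {
        name: sum(weights[a] * s for a, s in scores(name).items())
        for name in RESTAURANTS
    }
    return max(totals, key=totals.get), totals


def lex(most_important):
    """Lexicographic: best alternative on the single most important attribute."""
    return max(RESTAURANTS, key=lambda name: scores(name)[most_important])


def sat(cutoff, attributes):
    """Satisficing: first alternative meeting the cut-off on every listed attribute."""
    for name in RESTAURANTS:
        if all(scores(name)[a] >= cutoff for a in attributes):
            return name
    return None


def eba(ordered_attributes, cutoff):
    """Elimination by aspects: drop alternatives below the cut-off, one attribute at a time."""
    remaining = list(RESTAURANTS)
    for attribute in ordered_attributes:
        remaining = [n for n in remaining if scores(n)[attribute] >= cutoff]
        if len(remaining) <= 1:
            break
    return remaining


print(wadd())
print(lex("playroom"))
print(sat(2, ATTRIBUTES))        # no restaurant is at least average on everything
print(sat(2, ATTRIBUTES[:-1]))   # relaxed: playroom no longer required
print(eba(["food", "dietary"], 2))
```

WADD and SAT loop over the attributes of one alternative at a time (alternative-based), while LEX and EBA pick an attribute and compare alternatives on it (attribute-based), which mirrors the distinction drawn above.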

Module 2.6: Reliability of cognitive processes: reconstructive memory

What will you learn in this section?

- Unreliability of memory

- Schema influences what is encoded and what is retrieved

- Memories can be distorted

- The theory of reconstructive memory: post-event information may alter the memory of an event

Memory’s unreliability

- We have already considered the way that schemas may influence memory processes at all stages of information processing, including encoding and retrieval.

- Studies like Anderson and Pichert (1978) show that schemas can determine what you do and do not remember even after the information has been encoded and stored in long-term memory. Depending on the schema you are using, you will find it easier to recall some details.

- This shows one of the limitations to the reliability of memory: retrieval of information from LTM may depend on whether or not you are using a particular schema. This is why we sometimes find it difficult to recall things, but then they “jump back” to us when the context changes and something in the new context triggers those memories.

- However, the fact that retrieval (or non-retrieval) of information depends on schemas in use is only one dimension of unreliability of memory. Another dimension is the tendency of memory to be distorted.

The theory of reconstructive memory and eyewitness testimony

- The theory of reconstructive memory proposes that memory, rather than being the passive retrieval of information from long-term storage, is an active process that involves the reconstruction of information. Reconstruction literally means that you construct the memory again.

- In order to see the extent to which memories can be altered by irrelevant external influences, Loftus and Palmer (1974) conducted their famous study on eyewitness testimony.

- It is important to note that the study actually consisted of two parts—experiment 1 and experiment 2—because there were two competing hypotheses.

- In experiment 1, 45 students were split into five groups and shown film recordings of traffic accidents (each participant was shown seven films). The order in which the films were shown was different for each participant. Following each film, participants were given a questionnaire asking them to answer a series of questions about the accident. Most of the questions were meant only as distractors, but there was one critical question that asked about the speed of the vehicles involved in the collision. The wording of this question varied among the five groups: one group was asked, “About how fast were the cars going when they hit each other?”, while for the other groups the verb “hit” was replaced with “smashed”, “collided”, “bumped” or “contacted”. Results showed that the mean speed estimates varied significantly across the five groups.

- The crucial point is that all participants watched the same films, and yet they gave significantly different mean speed estimates.

- Loftus and Palmer suggested that this finding could be interpreted in two possible ways.

1. Response bias: for example, a subject might not be sure whether to say 30 or 40 mph, and a verb of a higher intensity (such as “smashed”) biases the response towards a higher estimate. Memory of the event in this case does not change.

2. Memory change: the question leads to a change in the subject’s memory representation of the accident. For example, the verb “smashed” actually alters the memory so that the subject remembers the accident as having been more severe than it actually was.

- To choose between the two competing explanations, Loftus and Palmer conducted experiment 2. The rationale behind the second study was that if memory actually undergoes a change, the subject should be more likely to “remember” other details (that did not happen, but fit well into the newly constructed memory).

- In experiment 2, 150 students participated. They were shown a film depicting a multiple-car accident. Following the film, they were given a questionnaire that included a number of distractor questions and one critical question. This time participants were only split into three groups: “smashed into each other”, “hit each other” and a control group (that was not asked the critical question).

- One week later the subjects were given a questionnaire again (without watching the film). The questionnaire consisted of 10 questions, and the critical yes/no question was “Did you see any broken glass?” There had not been any broken glass in the video. Results showed that the probability of saying “yes” to the question about broken glass was 32% when the verb “smashed” was used, and only 14% when the word “hit” was used (which was almost the same as the 12% in the control group). So, a higher-intensity verb led both to a higher speed estimate and a higher probability of recollecting an event that had never actually occurred.

- Based on this result, the authors concluded that the second explanation for experiment 1 should be preferred: an actual change in memory, not just response bias.

- In line with the theory of reconstructive memory, Loftus and Palmer suggest that memory for some complex event is based on two kinds of information:

1.) information obtained during the perception of the event and

2.) external post-event information.

- Over time, the information from these two sources becomes integrated in such a way that we are unable to distinguish them. Applied to the study, this means that subjects who were given the question with the verb “smashed into” used this verb as post-event information suggesting that the accident had been severe.

- This post-event information was integrated into their memory of the original event, and because broken glass is consistent with a severe accident, these subjects were more likely to think they saw broken glass in the film. These findings can also be interpreted from the perspective of schema theory: the high-intensity verb “smashed” used in the leading question activates a schema for severe car accidents. Memory is then reconstructed through the lens of this schema.

- Another argument against the applicability of these results to natural conditions is that real-life eyewitness testimony often involves recognition (recognizing a stimulus as something you have already seen) rather than recall (in the absence of a stimulus). Eyewitness testimony is often required for recognizing individuals suspected of committing a crime. Also, in the previous example we saw that leading questions (with verbs of varying emotional intensity) may provide post-event information that contributes to reconstructive memory. In real-life situations, however, post-event information might take more aggressive forms, for example providing a person with misleading information. This might happen in police interrogations or in the presence of other related testimonies.

Module 2.7: Biases in thinking and decision-making

What will you learn in this section?

- Dual theory: System 1 and System 2 thinking

- Heuristics are cognitive shortcuts or simplified strategies; heuristics lead to cognitive biases, which may be identified by comparing the decision to a normative model

- System 1: immediate automatic responses;

- System 2: rational deliberate thinking (Daniel Kahneman)

Dual Theory

- As part of the IPA, the dual process model (DPA) sees thinking as occurring on a continuum, from

1.) System 1 (intuitive, mainly unconscious) through to

2.) System 2 (analytic, controllable, conscious) thought.

- The IPA was initially focused more on System 2 thinking, but now also focuses on the effect System 1 thinking has upon System 2 thinking when making decisions.

- System 1 thinking occurs without the use of language and gives a feeling of certainty. It permits quick, automatic decision-making that involves little cognitive effort. System 1 thinking generally occurs when an individual has to make a decision quickly or cannot expend much cognitive effort on a decision, as cognitive energy is simultaneously being used elsewhere (like when paying attention to driving a car and being asked to make a decision about food choices).

- System 2 thinking, however, is limited by working memory, is rule-based, develops with age, uses language in its operation and does not necessarily give feelings of certainty. System 2 thinking involves high-level information processing activities that require attention and that characterize most decision-making, but System 1 thinking, which occurs below the level of consciousness, is seen as having an effect on System 2 thinking.

- The correction model argues that initial decisions occur quickly through System 1 thinking, with this decision either being expressed immediately, or confirmed and corrected by System 2 thinking. In this way, System 2 thinking is seen as a check upon System 1 thinking.

- Some research has, surprisingly, suggested that unconscious thought (System 1) produces better decisions than conscious thought (System 2). This may be because unconscious thought is an active (ever-changing) process that organizes information in memory in ‘clusters’ that relate to a specific choice option and that clearly separate different choice options from each other.

- Evolution has equipped humans with a range of cognitive skills to deal effectively with the environments they find themselves in. These include heuristics (mental shortcuts) that allow individuals to make quick decisions when deeper analysis is not possible. However, such thinking strategies are irrational, as they contain cognitive biases (illogical, systematic errors in thinking, such as self-serving bias, that negatively affect decision-making). There are several ways in which cognitive biases can exert an influence on thinking and decision-making:

1.) Framing effects

How a situation is perceived can affect the way in which a decision will be made. For example, in positions of loss or gain, like when gambling, if an individual perceives themselves as in a position of gain (having previously won a bet) they may be less likely to take risks (such as then making a large bet or betting on an unlikely outcome for a big gain). If they perceive themselves in a position of loss (having previously lost a bet) they may take risks to recover losses.

- Depending on whether outcomes are described (“framed”) as gains or losses, subjects give different judgments: they are more willing to take risks to avoid losses and have a tendency to avoid risk associated with gains. In other words, “Avoid risks, but take risks to avoid losses”. This is known as the framing effect.

Prospect theory proposes a mathematical way to describe such deviations from the normative model. In terms of the normative model, it does not matter where the reference point is: the value function should be a straight line.

However, people assign less (positive) value to gains and more (negative) value to losses. We selectively redistribute our attention to the potential outcomes based on how the problem is framed.
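The contrast between the straight-line normative value function and the asymmetric one described by prospect theory can be sketched as follows. The functional form and the default parameter values (0.88 for curvature, 2.25 for loss aversion) are taken from Tversky and Kahneman's 1992 estimates rather than from these notes, so treat them as an assumption made for illustration.

```python
def normative_value(x):
    """Normative model: subjective value is a straight line through the origin."""
    return x


def prospect_value(x, alpha=0.88, beta=0.88, loss_aversion=2.25):
    """Prospect-theory style value of a gain or loss x relative to a reference point.

    Gains are discounted (x ** alpha with alpha < 1), and losses are both
    discounted and scaled up by the loss-aversion factor.
    """
    if x >= 0:
        return x ** alpha
    return -loss_aversion * ((-x) ** beta)


for amount in (100, -100):
    print(amount, normative_value(amount), round(prospect_value(amount), 2))
```

With these parameters a gain of 100 is valued at less than 100, while a loss of 100 is valued as worse than -100, which matches the description of gains being under-weighted and losses over-weighted.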

2.) Use of information

How information is used can be biased in several ways. Information that is easily available may be paid more attention to, while information that is easily memorable (usually because it has an emotional or personal connection) is seen as more important. Also, information that displays an individual in a positive way will be favored (self-serving bias), as will information that supports an established belief (confirmation bias).

- For example, an individual with certain political views may seek out the opinions of others with similar views, which only has the effect of backing up that person’s views.

3.) Judgment

Due to the sheer amount of sensory information around us, humans have to be selective in what they pay attention to. However, this happens in a one-sided way for the following reasons.

a) We like to trust our judgments, and this results in us not paying attention to any potential sources of uncertainty. Any potentially problematic or worrying information tends to get ignored.

b) There is insufficient adjustment from an initial anchor, which means not updating our decisions as new information becomes available.

c) Once the first judgment is made, it acts as a “mental anchor”, a source of resistance to changing our decision. This leads us to ignore any information that is inconsistent with the original judgment.

4.) Post-decision evaluation

Because maintaining self-respect (self-worth) is important to us, we do not pay attention to information that can show us negatively. This includes self-serving attribution bias, the tendency to see favorable outcomes as the result of actions within our control and unfavorable outcomes as caused by factors beyond our control. This form of bias therefore helps protect us from losing self-esteem. In addition, people like to feel that they have an influence on external events, so we distort our understanding of such events so that we believe we have more control over them than we actually do. This illusion of control can lead to superstitions, such as believing that if you wear your lucky yellow pants, your football team will win. It also leads us to underestimate how bad our decisions can be and prevents us from learning from experience, because we ignore information that suggests we have no control over events. Hindsight bias, sometimes known as the “I-knew-it-all-along” effect, is the phenomenon of seeing an event as predictable even though there was no information at all to predict that event.

Module 2.8: Emotion and cognition

What will you learn in this section?

- Theories of emotion: a gradual shift of emphasis from bodily responses to cognitive factors

- Darwin (1872): Emotions are vestigial patterns of action

- James–Lange (1884): theory of emotion

- Cannon–Bard (1927): theory of emotion

- Schachter and Singer (1962): two-factor theory of emotion

- Lazarus (1982): initial cognitive appraisal

- LeDoux (1996): two physiological pathways

- The theory of flashbulb memory

- The Influence of emotions on

- Perceptions

- Memory

- Goals and decision-making

- Emotion is a state of mind that is determined by one’s mood. Emotions are seen as evolved, as they have an adaptive survival value in helping shape human reactions to environmental stimuli.

- This has two implications, both of which were reflected in later theories of emotion.

- Emotional response is caused by a stimulus.

- Emotional response results in behavior.

- Apparently the stimulus has personal significance for our well-being, so the brain reacts to the stimulus with a special mechanism that encourages certain action.

Theories of emotion

1.) Darwin (1872): Emotions are vestigial patterns of action

- In 1872 Charles Darwin published the book “The Expression of the Emotions in Man and Animals”, in which he claimed that emotions have an evolutionary meaning (either communication or survival) and can actually be nothing other than vestigial patterns of action. This fits well with the etymology of the word emotion (from “move”).

- For example, one of the current evolutionary explanations of the sweaty palms that we get when we experience a sudden fear is that sweat on the palms makes them softer and therefore enhances the grip on the bark when climbing up a tree. We might have inherited sweaty palms from our monkey ancestors who rushed to trees and climbed up in a moment of danger. Arguably, if emotions have an evolutionary basis and developed as certain adaptive actions, emotions should be expressed similarly across cultures. This idea has found support in a number of studies and observations.

- For example, Darwin himself gives the example of blushing: blushing is characteristic of people of all cultures and all ages. Interestingly, blushing covers a larger area of the body in populations that usually expose more skin (such as primitive tribes), but in modern societies it is mainly limited to the face and neck.

2.) James–Lange (1884): theory of emotion

- The James–Lange theory of emotion (proposed simultaneously by William James and Carl Lange in 1884) claimed that external stimuli cause a physiological change and the interpretation of this change is emotion. In other words, first you see a snake, then it produces certain physiological changes in your body (release of adrenaline, increased heart rate and so on) and then your mind interprets these changes as an emotion. You are afraid because your heart is beating fast; you feel happy because you smile; you feel sad because you cry.

- This theory seems somewhat counter-intuitive, if not primitive, but modern neuroscientific evidence actually supports some of its claims, especially the idea that feedback from bodily changes may play a role in modifying emotional responses.

3.) Cannon–Bard (1927): theory of emotion

- The Cannon–Bard theory of emotion (1927) was based on a number of animal studies that showed that sensory events could not directly cause a physiological change without simultaneously triggering cognitive processing. It was proposed that emotional stimuli cause two parallel processes at the same time: a physiological response and a conscious experience of emotion. In other words, your conscious experience of emotion is not a result of the bodily change—it is a process that accompanies it.

4.) Schachter and Singer (1962): two-factor theory of emotion

- Schachter and Singer (1962) claimed that emotions were the result of two-stage processing: first a physiological response, then a cognitive interpretation. For example, you see a potentially threatening stimulus (a snake) and it triggers a physiological response (heart palpitations). Then your brain quickly scans the surroundings for a possible explanation of this palpitation. When the brain notices a snake, it cognitively interprets the heartbeat as fear, and then fear is experienced as a conscious emotion. The advantage of this theory is that it provides an explanation for why we can feel different emotions, such as tears of sadness and tears of joy, under the same physiological circumstances.

5.) Lazarus (1982): initial cognitive appraisal

- In his theory of appraisal, Lazarus shifted cognitive interpretation to the first stage of emotional processing, claiming that initial cognitive appraisal precedes physiological changes. The theory asserts that the quality and intensity of emotions are controlled through this process of the initial cognitive appraisal.

6.) LeDoux (1996): two physiological pathways

- Much light on the nature of emotions was shed by research into their biological underpinnings. Joseph LeDoux (1996) studied fear conditioning in rodents and looked at the sequence of stages the brain goes through when processing fear stimuli. The amygdala performs a key function in fear processing. LeDoux describes two pathways, or sensory roads, to the amygdala.

a) The fast pathway leads from the stimulus (sensory input) to the thalamus and then the amygdala, producing an emotional response.

b) The slow pathway leads from the stimulus to the thalamus, then the primary sensory cortex, association cortex, hippocampus and amygdala, and finally the response. In other words, in the slow pathway information travels additionally via the neocortex and hippocampus.

- The idea of this theory is that our brain simultaneously produces a quick, direct emotional reaction and triggers a more sophisticated process of cognitive interpretation. On the one hand, the fast pathway prepares us for possible danger. On the other hand, the slow pathway may process information more deeply and modify the initial emotional response.

- For example, if you walk along the street and suddenly see an aggressive dog barking at you, your heart rate will increase, your adrenal glands will release adrenaline into the bloodstream, and your body will quickly enter a state of alertness. However, when you notice a second later that the dog is behind a tall fence, you will relax and the initial bodily changes will gradually fade away.

The theory of flashbulb memory

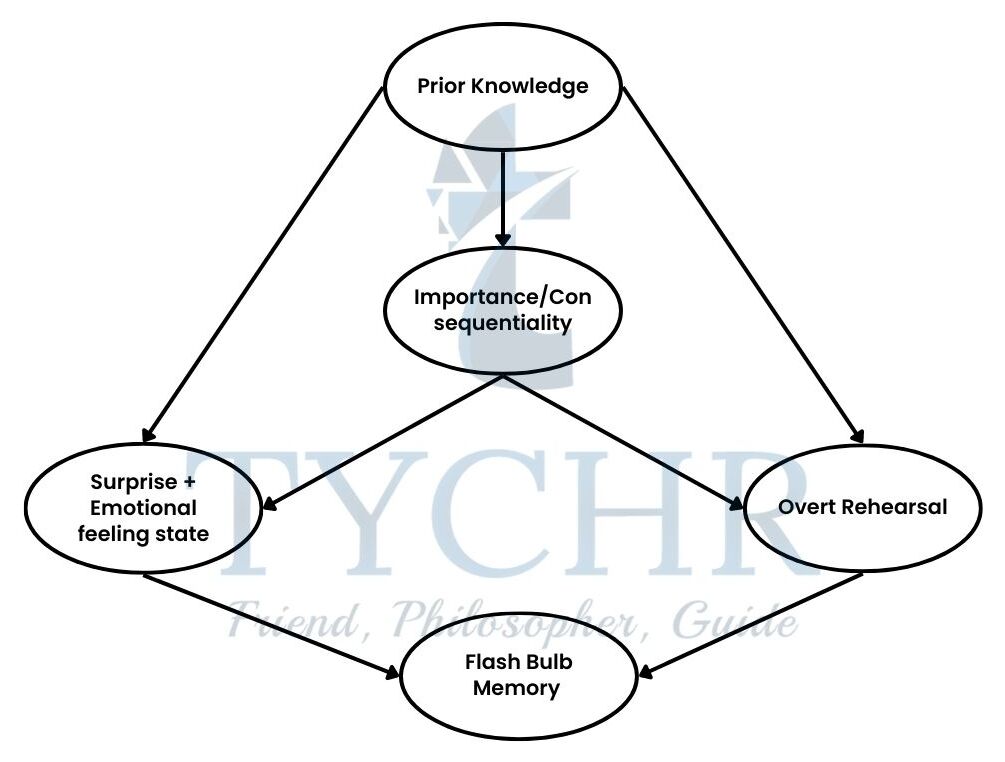

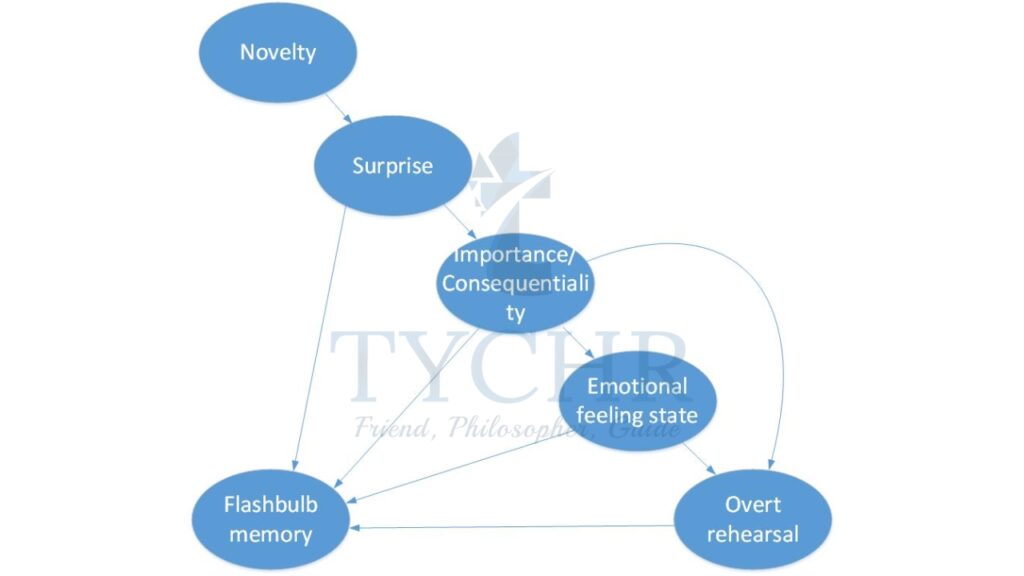

- The flashbulb theory of memory was proposed by Roger Brown and James Kulik in 1977. According to them, flashbulb memories are vivid memories of the circumstances under which an unexpected and upsetting event was first learned of. They are like a “snapshot” of a significant event. An example that these authors used as a starting point in their investigation was the assassination of John F. Kennedy in 1963. They observed that people generally had very vivid memories about the circumstances of first receiving the news about the assassination; they seemed to clearly remember where they were when they heard the news, who told them, what they were doing, what happened next, the weather, and the smells in the air.

- To further research the determinants of this phenomenon, they gave 40 Caucasian and 40 African American participants, ranging from 20 to 60 years old, a questionnaire asking them about other assassinations as well as other socially and personally significant events.

- Answers were submitted in the form of free recall of unlimited length. It was found that a lot of other events also created vivid flashbulb memories. However, there were two variables that had to attain sufficiently high levels in order for flashbulb memory to occur: surprise and a high level of personal consequentiality (which causes emotional arousal). If these variables reach a sufficient level, this triggers a maintenance mechanism: overt and covert rehearsal that strengthens the degree of elaboration of the event in memory.

- So the theory posits two separate, but related mechanisms of flashbulb memories:

a) formation and

b) maintenance.

- The mechanism of formation is the photographic representation of events that are surprising and personally consequential, and therefore emotionally arousing. Brown and Kulik used evolutionary arguments to explain the existence of such a mechanism: personally consequential events have high survival value, so humans could have developed a special memory process to deal with them.

- The mechanism of maintenance includes overt rehearsal (conversations with other people in which the event is reconstructed) and covert rehearsal (replaying the event in one’s memory). Naturally, rehearsal consolidates memory traces and the memory is experienced as very vivid even years after the event.

Influence of Emotions

Emotion and perception

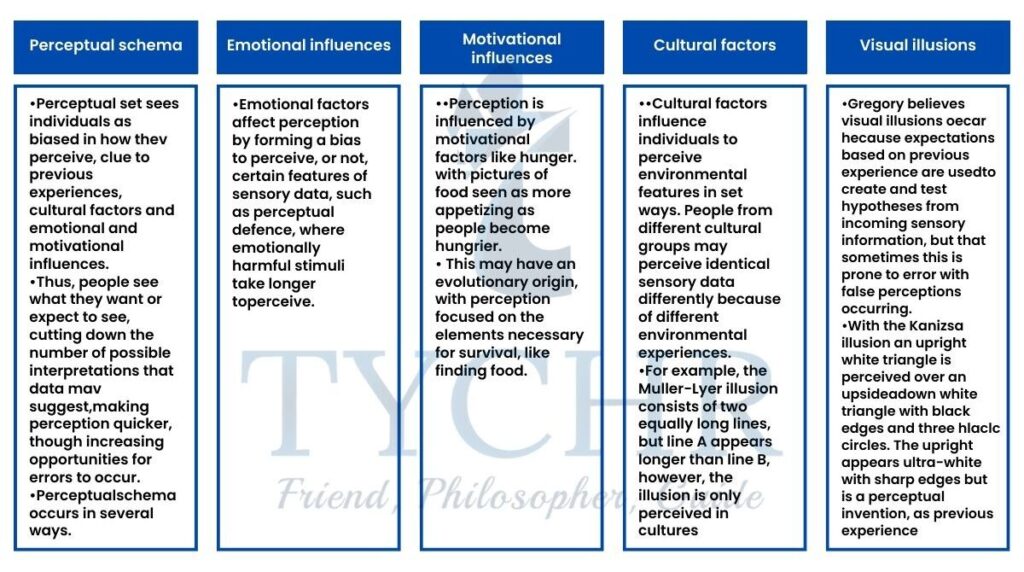

We know that people’s perceptions can be skewed by schemas, which cause them to see what they expect to see based on their expectations, past experiences, culture, and so on. Emotional factors, which create a bias to perceive (or not perceive) certain features of incoming sensory data, are another influence on perception. An important factor here is perceptual defense, where emotionally threatening stimuli take longer to perceive.

Emotion and memory

- Memory can be affected by schemas, where individuals reconstruct memories according to which particular schemas they are using at the time of recall, for example, through misleading information or questions, post-event information, confabulation, and so on.

- Memory can also, though, be affected by emotional factors in a number of ways, such as through flashbulb memory, repression and contextual emotional effects. Emotion seems to affect memory at all stages of the process: encoding, storage and retrieval.

- Emotionally arousing stimuli have also been seen to lead to amnesia, even though no physical damage to brain structures associated with memory has occurred. This can occur as retrograde amnesia, where memories from before a traumatic event are lost, or anterograde amnesia, where new memories cannot be created after experiencing an emotional event.

The effects of emotion on memory as a process

1. Encoding

- Encoding concerns the means by which information is represented in memory. The cue-utilization theory argues that high levels of emotion focus attention mainly on the emotionally arousing elements of an environment, and so it is these elements that become encoded in memory and not the non-arousing elements.

- Emotional arousal may also increase the length of time attention is focused on emotionally arousing elements of an environment, which aids the encoding of such elements.

2. Storage

- Storage relates to the amount of information that can be retained in memory. Emotional arousal can increase the consolidation of information in storage, making such memories longer lasting.

- This is not an immediate effect, as emotional arousal seems mainly to enhance memory after a delay. This is possibly because the hormonal processes involved in memory consolidation take a little time, and because emotional experiences incur elaboration (creating links between the new information and previously stored information).

3. Retrieval

- Retrieval concerns the reconstruction of memories. This process is influenced by contextual emotional effects, which relate to the degree of emotional similarity between the circumstances of encoding and the circumstances of retrieval, through either the mood-congruence effect or mood-state dependent retrieval. These effects occur because, when information is encoded, an individual stores not only the sensory aspects of the information (what it looks like, sounds like, etc.) but also their emotional state at that time.

- The ability of people to retrieve information with the same emotional content as their current emotional state is referred to as the mood-congruence effect.

- Mood-state dependent retrieval refers to the ability of individuals to retrieve information more easily when their emotional state at the time of recall is similar to their emotional state at the time of encoding.

Emotions and goals in decision-making

- Originally the ADMF focused on the accuracy of decision making and the amount of cognitive effort in decision making but the ADMF was later extended to include the role of emotion and goals (desired outcomes of a decision) as these can greatly affect decision-making.

- Choice goals framework for decision-making

There are several important factors when deciding between available choices:

- maximizing accuracy of decision making

- minimizing cognitive effort

- minimizing negative emotions while making decisions and afterwards

- maximizing the ease of justifying a choice to yourself and others.

- The importance of these factors varies with different choice-making situations.

- For example, if it is important to be able to justify a decision, then this may lead to using a decision-making strategy that clearly shows the similarities and differences between available options.

- When decision-making involves negative emotions, simpler decision-making strategies are often selected. Cognitive performance then worsens: decisions take longer to arrive at and the risk of error in the choice increases.

- Negative emotions can especially be aroused when a tradeoff has to be made between two highly valued options; for example, painful surgery that may improve health versus continued poor health without enduring the surgery. In such situations, individuals will often avoid decision-making strategies involving tradeoffs, for example, by making no decision at all. This is an example of emotion-focused coping.

Coping theory

- Folkman & Lazarus (1988) argue that individuals respond to negative emotions in one of two ways:

- Problem-focused coping: negative emotions are seen as indicating how important a decision is to an individual, so they concentrate on making the best choice and avoid being influenced by the experience of negative emotions.

- Emotion-focused coping: an individual focuses more on minimizing negative emotions than on the quality of decision making; for example, refusing to make a choice, getting someone else to make the choice, avoiding the distressing aspects of a choice, making a choice that is easier to justify, and so on. Negative emotions can be generated when tradeoffs between two desirable options have to be made; for example, whether to allow fracking, which may have the desirable outcome of generating money for a community, but may have the negative outcome of damaging the environment.

Module 2.9: Cognitive processing in the digital world

What will you learn in this section?

- The influence of digital technology on cognitive processes

- The positive and negative effects of modern technology on cognitive processes

- Methods used to study the interaction between digital technologies (DTs) and cognitive processes

The impact of digital technology on cognitive processes

- Digital technologies (DTs) consist of electronic devices and systems that generate, process and store data. DTs include social media such as Facebook, online games such as Minecraft, applications (apps) and mobile devices. Such technologies are ever changing, as the digital world tends to develop very quickly.

- In recent years digital technology has come to greatly affect many individuals’ lives, leading to the formation of what has been called the ‘Information Society’. DTs affect a wide range of cognitive processes. Psychologists are thus interested in the effects they have on attention, especially the effects of multitasking (for example, using DTs in lectures).

- Indeed, the ‘myth of multitasking’ refers to the false belief that humans can perform several cognitive tasks simultaneously. In fact, humans do not perform several tasks at once; they just switch from one task to another very quickly, which expends cognitive energy and leads to errors being made. Cognitive psychologists are also interested in the effects of DTs on learning processes and memory.

The positive and negative impacts of modern technology on cognitive processes

Positive effects

- There are some positive effects of DTs on cognitive processes, though only if DTs are used in specific ways. For example, DTs seem to work best when used by small groups rather than by individuals, as group usage stimulates deeper-level analysis of material than individual use does.

- DTs also seem to aid learning more when used in a short and focused way and can be especially beneficial to lower-attaining students and those with learning disorders or from disadvantaged backgrounds, as they can provide intensive support to aid learning.

- DTs seem to be most beneficial when used as a support for learning, rather than the sole means of learning, and in certain subjects, like mathematics, where deeper levels of cognitive processing may not be necessary.

- Overall, DTs improve learning if they are focused on assisting what is to be learned rather than being seen as a means of learning in themselves.

Negative effects

- Although DTs present new opportunities for enhanced learning, through use of the internet and so on, concern has arisen that such technologies also have negative effects, for example increases in Attention Deficit Hyperactivity Disorder (ADHD) and Internet Addiction Disorder, as well as decreased cognitive performance caused by overloading visual working memory. Concern has also grown that younger individuals, who have grown up only knowing DTs, are increasingly unable to use other forms of perceptual media effectively, such as reading books and magazines.

- The relatively recent growth of computer usage has led to changes in cognitive functioning. Use of computers can quickly lead to an overload of visual working memory and thus a decrease in an individual’s cognitive performance.

- DTs may also lead to individuals who are easily distracted from learning tasks and who possess short attention spans and inferior writing skills, due to the predominant use in digital media of short snippets of information. This has a negative effect on the development of critical skills, with students conditioned to give easy, quick answers rather than considered viewpoints. Students who engage in digital multitasking, such as accessing their phones or emails while in lectures, tend to get lower test scores.